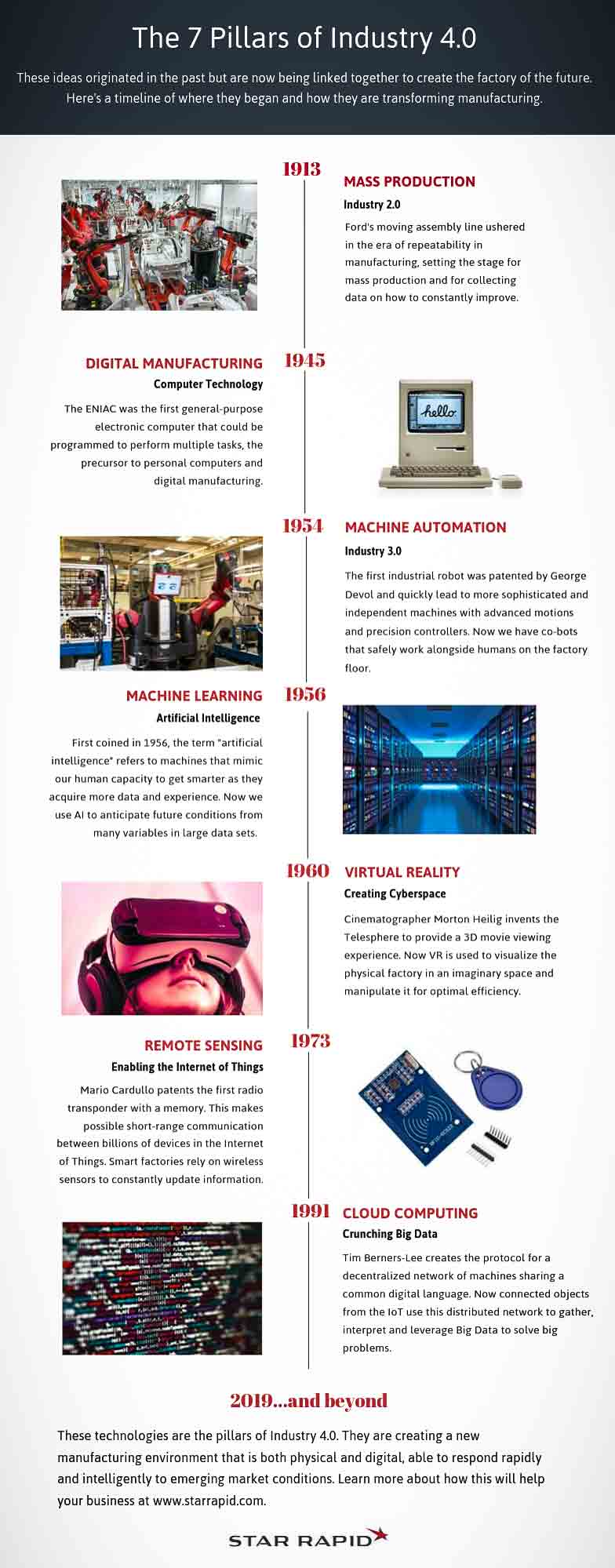

Industry 4.0 represents the next generation of manufacturing for an interconnected world. To empower it, several discrete technologies and processes are being brought together to form a new ecosystem that will respond independently and automatically to external conditions. This saves time, money, energy and natural resources while producing goods of superior quality exactly when and where needed. Here’s a look at the 7 core features of Industry 4.0 and what they mean for the factory of today and tomorrow.

Mass Production – 1913

Henry Ford is credited with instituting the systematic and repeatable production of a single item on a moving assembly line. This brought the time needed to make a car down from 12 hours to 2 1/2 hours, a massive productivity gain that set the standard for mass production that is still being improved upon today. Industry 2.0 begins with mass production.

Digital Manufacturing – 1945

Although electrically-operated computing devices had been built before this, each of the earlier prototypes was limited to performing a single routine function over and over again, based on very simple operating instructions. The ENIAC was the first computer whose function was not pre-determined, but could be altered by the operator to perform a variety of computing tasks. This is the beginning of the digital age, bringing us the computers we use every day to monitor and control all business activities.

Machine Automation – 1954

Inventor George Devol’s original idea for a powered, articulated armature came to be called a “robot,” a term popularized by Isaac Asimov’s sci-fi stories. The first such machine was named Unimate #001, and was installed at a GM auto factory to help make automotive die castings. The robot revolution and machine automation are considered to be the foundation of Industry 3.0.

Machine Learning – 1956

The concept of artificial intelligence as an academic discipline started at Dartmouth College in the mid-1950s. The task of making an electronic brain would turn out to be much more formidable than first imagined. Computer architecture is now mimicking that of the human brain, with massively parallel processors able to share information among themselves using free association to create new concepts – i.e., thinking.

Virtual Reality – 1960

The first head-mounted display was patented by Morton Heilig to give film viewers the sense that they were immersed in a fully 3D, imaginary world. Continuous improvements in this technology allow us to not only imagine a digital space but also to interact with it via haptics and to manipulate this space to test process control improvements in real time.

Remote Sensing – 1973

The origins of this technology go all the way back to the 1940s, when radio-frequency transponders were used to identify friendly aircraft in wartime. Now, both active and passive radio-frequency identification (RFID) systems are used in countless devices for security access, inventory control, shipment tracking, anti-theft and more. Billions of RFID tags now help make up the ever-expanding Internet of Things.

Cloud Computing – 2000

Tim Berners-Lee released the software architecture for the public World Wide Web in 1991, but it wasn’t until 2000 that private companies like Amazon and IBM began to offer commercial services to leverage this massively distributed and decentralized resource as an IT solution. Now shared computing power saves resources, is location-independent, and offers enhanced mobile data security.

Smart factories use sensors, big data and automated equipment to immediately respond to constantly changing conditions and demands. Michigan CNC Machining Parts, Inc. is working to be at the forefront of these developments to offer the smartest, leanest and fastest manufacturing solutions in the industry. Learn more when you upload your CAD files for a free design quotation at

Chris Williams is the Content Editor at Michigan CNC Machining Parts, Inc. He is passionate about writing and about developments in science, manufacturing and related technologies. He is also a certified English grammar snob.